Foreman integrates with a number of systems, such as DNS (BIND, AD), DHCP (ISC) and Puppet, when hosts are created and destroyed. It does most of this via its smart proxy architecture, where an agent (the proxy) is deployed on your other servers and is called from your central Foreman host during "orchestration".

Most production setups are a bit more complex, though, and involve other systems that Foreman doesn't integrate with, so the plugin support in version 1.1 is there to extend Foreman's capabilities. Since writing a plugin means learning some Ruby and Rails, I've released foreman_hooks so admins can instead write simple shell scripts that hook straight into Foreman's orchestration feature.

I'm going to walk through an example hook that runs a PuppetDB command to clean up stored data (facts, resources) when hosts are deleted in Foreman.

Foreman 1.2+ installed from RPMs: please use the ruby193-rubygem-foreman_hooks package from the plugins repo.

Other users: first we'll install the plugin by adding this config file:

# echo "gem 'foreman_hooks'" > ~foreman/bundler.d/foreman_hooks.rb

And then run this to install and restart Foreman:

# cd ~foreman && sudo -u foreman bundle update foreman_hooks

Fetching source index for http://rubygems.org/

...

Installing foreman_hooks (0.3.1)

# touch ~foreman/tmp/restart.txt

Next, we'll create a hook script that runs when a managed host is destroyed in Foreman:

# mkdir -p ~foreman/config/hooks/host/destroy

# cat << 'EOF' > ~foreman/config/hooks/host/destroy/70_deactivate_puppetdb
#!/bin/bash
# We can only deactivate data in PuppetDB, not add it
[ "x$1" = xdestroy ] || exit 0

# Remove data in PuppetDB, supplying the FQDN
#
# assumes sudo rules set up as this runs as 'foreman':
# foreman ALL = NOPASSWD : /usr/bin/puppet node deactivate *
# Defaults:foreman !requiretty
#
sudo puppet node deactivate "$2"
EOF

# chmod +x ~foreman/config/hooks/host/destroy/70_deactivate_puppetdb

(on Foreman 1.2, change "host" in the path to "host/managed")

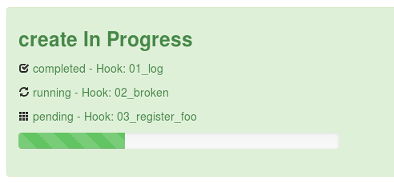

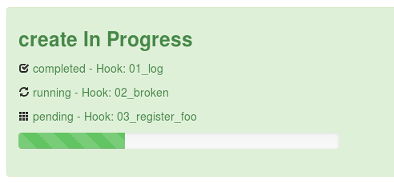

There are a few things to note here. The path we're creating under config/hooks/ refers to the Foreman object and event (see the README). For hosts we can use "create", "update" and "destroy" to extend the orchestration process, and there are similar events for every other object in Foreman. The 70 prefix influences the ordering with other tasks; see grep -r priority ~foreman/app/models/orchestration for more.
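To make the naming convention concrete, here is a small sketch that splits a hook filename at the first underscore. The helper names hook_priority and hook_label are made up for illustration, and this is not necessarily how foreman_hooks parses names internally; it simply mirrors the "at priority 70" that shows up in the log output further down.

```shell
#!/bin/bash
# Hypothetical helpers (not part of foreman_hooks): split a hook
# filename such as "70_deactivate_puppetdb" at the first underscore.

# Print the numeric prefix, e.g. "70".
hook_priority() {
    local name
    name=$(basename "$1")
    printf '%s\n' "${name%%_*}"
}

# Print the remainder of the name, e.g. "deactivate_puppetdb".
hook_label() {
    local name
    name=$(basename "$1")
    printf '%s\n' "${name#*_}"
}
```

Calling hook_priority on the path from the example above would give 70, which you can compare against the priorities found by the grep.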

The script gets two arguments. The first is the event name ("destroy" etc.) and, very importantly for orchestration events, this can change. If an orchestration task fails, the process will get rolled back, so the script will then be called with the opposite event to the one it was first called with. For example, a hook in the create/ directory will first be called with "create", then a later task may fail and it will be called again with "destroy" to revert the change. Orchestration of DNS records etc. in Foreman works in the same way. Since this example is only able to remove data and not add it, the first line checks the argument and exits if it isn't asked to destroy. Other scripts should take note of the value of this argument.
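For a hook that can both add and remove data, the rollback behaviour suggests branching on the first argument. The following is only a sketch of that idea for a script placed in the create/ directory; register_host and unregister_host are hypothetical stand-ins for whatever your external system needs.

```shell
#!/bin/bash
# Sketch of a hook for the create/ directory that copes with rollback.
# register_host and unregister_host are hypothetical placeholders for
# calls to whatever external system is being integrated.

register_host()   { echo "registering $1"; }
unregister_host() { echo "unregistering $1"; }

handle_event() {
    local event=$1 fqdn=$2
    case "$event" in
        create)  register_host "$fqdn" ;;
        destroy) unregister_host "$fqdn" ;;   # rollback of a failed create
        *)       return 0 ;;                  # ignore any other event
    esac
}

# Foreman passes the event name and the object name as $1 and $2.
if [ "$#" -gt 0 ]; then
    handle_event "$@"
fi
```

Keeping the logic in a function makes it easy to try by hand, e.g. by running the script with "destroy test.fm.example.net" as arguments before letting Foreman call it.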

The second argument is the string representation of the object, i.e. the FQDN for host objects. On stdin, we receive a full JSON representation which gives access to other attributes. There are helpers in hook_functions.sh to access this data; see examples/ to get a copy.
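If you'd rather not use the helpers, one simple approach is to copy stdin to a temporary file at the start of the hook, since stdin can only be read once. This is a minimal sketch under that assumption; save_hook_payload is a name I've made up here and is not one of the functions in hook_functions.sh.

```shell
#!/bin/bash
# Sketch: keep the JSON that Foreman sends on stdin so later steps in
# the hook can inspect it. save_hook_payload is a hypothetical name,
# not one of the helpers from hook_functions.sh.

save_hook_payload() {
    local file
    file=$(mktemp) || return 1
    cat > "$file"          # copy all of stdin into the temp file
    printf '%s\n' "$file"  # report where the payload was stored
}
```

In a hook you might call payload=$(save_hook_payload) near the top, remove the file again when the script exits, and query it with whichever JSON tool you have installed.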

Lastly in this example, we run the PuppetDB command to remove the data. The exit code of orchestration hooks is important here. If the exit code is non-zero, this will be treated as a failure, so Foreman will cancel the operation and roll back other tasks that were already completed.
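In longer hooks it's easy to lose the exit code of an earlier command, because a script exits with the status of its last command. A small wrapper can make failures explicit; this is just a sketch, and run_step is a hypothetical helper name rather than anything shipped with foreman_hooks.

```shell
#!/bin/bash
# Sketch: run one step of a hook and pass its exit status back, so a
# failure reaches Foreman as a non-zero exit code. run_step is a
# hypothetical helper name.

run_step() {
    "$@"
    local rc=$?
    if [ "$rc" -ne 0 ]; then
        # Report on stderr which command failed and with what status.
        echo "hook step failed (exit $rc): $*" >&2
    fi
    return "$rc"
}
```

The example hook could then end with run_step sudo puppet node deactivate "$2" || exit 1, so that a failed deactivation cancels the delete.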

Now when the host gets deleted from either the Foreman UI or the API, it gets deactivated in PuppetDB:

# puppet node status test.fm.example.net

test.fm.example.net

Deactivated at 2013-04-07T13:39:59.574Z

Last catalog: 2013-04-07T11:56:40.551Z

Last facts: 2013-04-07T13:39:30.114Z

There's a decent amount of logging, so you can grep for the word "hook" in the log file to find it. You can increase the verbosity with config.log_level in ~foreman/config/environments/production.rb.

# grep -i hook /var/log/foreman/production.log

Queuing hook 70_deactivate_puppetdb for Host#destroy at priority 70

Running hook: /usr/share/foreman/config/hooks/host/destroy/70_deactivate_puppetdb destroy test.fm.example.net

One area I intend to improve is interacting with Foreman from within the hook script. At the moment, I'd suggest using the foremanBuddy command line tool from within your script, though you shouldn't edit the object from within the hook unless you're using the after_create/after_save events.

I hope this sets off your imagination to consider which systems you could now integrate with Foreman, without needing to start with a full plugin. Maybe we could begin collecting the most useful hooks in the community-templates repo or on the wiki.

(PuppetDB users should actually use Daniel's dedicated puppetdb_foreman plugin. I hope development of dedicated plugins down the line will happen as a natural extension.)